While organizations grapple with the digital transformation imperative, tectonic shifts are also underway in the IT provider community as companies come to recognize data, not things, as the key source of business value for today and tomorrow. In the IT industry, this shift is occurring at the top – startups are building disruptive and highly profitable data-driven business models – and at the foundational level, as chip makers begin to reinvent themselves around the analysis and exchange of data. At a Data-Centric Innovation Day held in San Francisco this month, even 50-year-old semiconductor firm Intel reaffirmed its intent, announced two years back, to follow the data. According to Navin Shenoy, EVP and GM for Intel’s Data Centre Group, the day was especially significant as “the first truly data centric launch in our history”: where Intel has historically delivered the CPU foundation for the industry, he noted, with the announcements made at the event the company “moves beyond CPU” to the portfolio needed to address growing requirements to “move faster, store more, and process everything.”

In Shenoy’s vision, there are three mega trends driving change in the industry: cloud, AI, and a cloudification of the network and the edge that he predicts will accelerate as 5G emerges. He cited third-party research predicting a 50 percent compound annual growth rate (CAGR) in compute cycles from 2017 to 2021, an explosion of compute demand that is further complicated by the diversification of workload needs. These twin challenges – data growth and the growing variety of infrastructure demand – are at the heart of Intel’s new product announcements. Intel is investing, Shenoy explained, in a new approach to data-centric infrastructure, architecting the future of the data centre and the edge with innovations across the range of general-purpose and purpose-built processors, aimed at high-growth workload targets. Altogether, Intel announced eleven updates, including the key items outlined below.

- The release of 2nd-Generation Intel Xeon Scalable processors (Cascade Lake family) that deliver enhancements in performance, AI inference, network functions, persistent memory bandwidth and security. Notably, these integrate Intel Deep Learning Boost (Intel DL Boost) technology, which is optimized to accelerate AI inference workloads such as image recognition, object detection and image segmentation within data center, enterprise and intelligent-edge computing environments. The processors also support Intel Optane DC persistent memory, which allows customers to move more data into memory and so derive insights from their data more quickly. According to Intel, when combined with traditional DRAM in an eight-socket system, the platform delivers up to 36TB of system-level memory capacity, a three-fold increase over system memory capacity in the previous generation of the Xeon Scalable processor.

- Several new processor families aimed at specific workloads, including:

- the 56-core, 12-memory-channel Intel Xeon Platinum 9200 processor designed for a variety of high-performance computing (HPC) workloads, AI applications and high-density infrastructure.

- network-optimized Intel Xeon Scalable processors built with communications service providers to deliver more subscriber capacity and reduce bottlenecks in network functions virtualization (NFV) infrastructure.

- the Intel Xeon D-1600 processor, a system-on-chip (SoC) designed for dense environments where power and space are limited. This SoC is designed to support customers who are looking to 5G and to extend Intel’s solutions to the intelligent edge.

- the Intel Agilex FPGA (field programmable gate array) family of next-generation 10nm FPGAs, built to enable transformative applications in edge computing, networking (5G/NFV) and data centers. The Agilex FPGA family enables application-specific optimization and customization to deliver greater flexibility and agility to data-intensive infrastructure.
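As a quick sanity check on the growth and capacity figures quoted above, the short Python sketch below works out what they imply. It uses only numbers stated in the text; the variable names are illustrative, the choice of four compounding steps for 2017-21 is an assumption, and the derived values are arithmetic consequences rather than Intel-published specifications.

```python
# Figures quoted in the article (attributed to Intel or to the research it cited).
CAGR = 0.50                 # 50 percent compound annual growth in compute cycles
COMPOUNDING_YEARS = 4       # assumption: 2017 is the base year, so 2017-21 is four steps
TOTAL_CAPACITY_TB = 36      # system-level memory: DRAM plus Optane DC persistent memory
SOCKETS = 8                 # eight-socket system
GENERATION_UPLIFT = 3       # "three-fold increase" over the previous generation

# Compound growth: demand multiplies by (1 + rate) each year.
compute_growth_factor = (1 + CAGR) ** COMPOUNDING_YEARS

# Capacity per socket, and the previous-generation ceiling implied by a 3x uplift.
per_socket_tb = TOTAL_CAPACITY_TB / SOCKETS
implied_previous_gen_tb = TOTAL_CAPACITY_TB / GENERATION_UPLIFT

print(f"Compute demand multiple over the period: {compute_growth_factor:.2f}x")
print(f"Memory per socket: {per_socket_tb} TB")
print(f"Implied previous-generation system capacity: {implied_previous_gen_tb} TB")
```

Under these assumptions, compute demand grows roughly five-fold over the period, and the 36TB figure corresponds to 4.5TB per socket.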

The high-end capabilities built into these new compute platforms raise the question, ‘who’s buying?’ Commenting on the applicability of these platforms to the Canadian market, Denis Gaudreault, country manager for Intel Canada, observed that the Canadian market is also in transition: “the [historical] gap between Canada and the US in terms of the adoption of advanced products is shrinking, as Canadian companies in several sectors have begun to develop their strategies around digital transformation.” Sixth-largest on a global basis, Canada represents an important market for Intel, and Gaudreault believes that no matter the organization, static IT infrastructure will no longer be capable of managing the growth in data traffic or the growing need for greater flexibility and agility. He also pointed to the potential cost benefits available in the new platforms: “The products we announced – the Xeon Platinum 9200 or the Optane memory – will allow customers, such as the telcos or the government of Canada through Shared Services Canada, to enable more workloads, to put far more VMs on far fewer machines. They seem like super high-end products, but they provide more flexibility to move storage, and support more firewalls, and load balancing. Using these CPUs, this can all be done on standard Intel based servers.” In another example, he pointed to the advantages of in-memory storage: “So we expect to engage with pretty much all customers who are using CPNI (customer proprietary network information) in the delivery of web or Internet services. [With Optane], the customer will have a bigger in-memory database that costs less than using DRAM (dynamic random-access memory). In addition to better costs, Optane will deliver faster response to provide better SLAs to customers.”

From chip to solution

Beyond individual product enhancements, however, Intel took pains at the event to stress its new approach to product development. As Gaudreault explained, “Intel investment, and focus in R&D and development is now shifting from a product pure CPU level to what we call real workload performance that is more customer centric. So we innovate by looking at all the things that matter to performance improvement for specific customers and not just CPU: we consider architecture, the packaging, memory, interconnects, security, software and AI across virtually all verticals.”

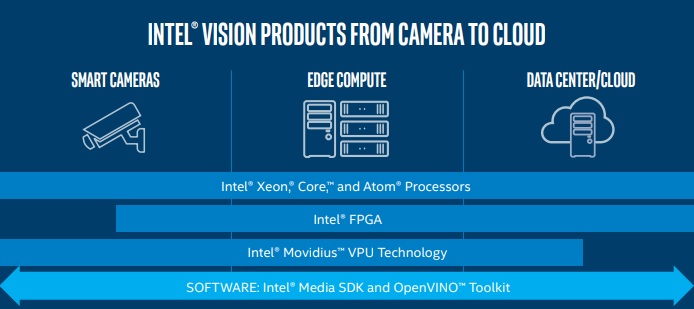

Described by Shenoy as “innovation at the solution level,” particularly in AI, virtualization and the edge, this focus involves increasing investment in software, solutions and systems-level thinking. And it is being delivered in multiple ways. On the IoT front, for example, Gaudreault noted that Intel now has a portfolio that addresses edge to data centre needs (though not sensors), and that for distribution, it has market-ready solutions. “We work with partners and OEMs that use Intel technology to solve for different customer challenges, such as smart transportation, smart cities, smart buildings. We work with these to produce and package solutions that are already fully integrated and ready for a systems integrator or a smaller partner who will get it from a solutions partner.” Building on a legacy of developing end user architectures and reference models that serve as a foundation for implementation, Intel works on pre-integrated solutions with a goal of speeding IoT deployment.

Another path involves a software-defined approach to integrating and managing diverse Intel platforms that is available to a broader community. Edge computing provides a good example of this alternative, open approach. Driven by the digitization of new industries and environments, such as manufacturing, where latency needs may dictate more local computing, distributed computing – commonly referred to as edge – is receiving greater attention. In many IoT scenarios, a cloud network is evolving in which intelligence may reside at various points in the network, including the edge device, access networks, edge nodes, the gateway, core networks, and the cloud/data centre. In Intel’s view, AI is a growing requirement across this cloud network. As Gary Brown, director of AI marketing, Intel IoT Group, explained, “there is a lot of interest in using AI, specifically for deploying low power edge inference, a lot of interest in deploying AI algorithms for use in devices at the edge.” However, AI is not limited to the edge; rather, there is a “continuum” of potential application: “Think of a continuum from the end point sensors to the edge computing nodes (gateways) to the network infrastructure and the data centre, a/k/a cloud. Intel has devices that support AI across the whole spectrum,” Brown noted. “The interesting thing about Intel’s data centric business is that while there is data being processed at all of those different parts of the network, customers will have different ways of partitioning their intelligence.”

To illustrate, Brown pointed to the new high-end, second-generation Xeon Scalable processor, which now contains an instruction set that has been enhanced for AI to support recommendation engines, a popular application delivered by many service providers. Since these providers serve millions of users with high-performance software, this must run in the data centre. At the edge, however, power budgets are low and specific, purpose-built solutions are needed. As Brown explained, Intel has a range of platforms to address edge needs, including: low-power Intel Core CPUs for simple AI used in applications such as facial recognition at the local level; Core CPUs with an embedded GPU for optimizing some algorithms that may be deployed at the gateway level; low-power processors, such as the Movidius VPU, which runs on several watts of power or less and is optimized for computer vision and deep learning inference; for more sophisticated AI applications, dedicated platforms such as Intel FPGA technology (acquired with Altera back in 2015), which can be customized to specific applications; and the Mobileye platform, which supports computer vision in software stacks for autonomous driving applications.

According to Brown, one or several of these chips could end up in an application; in the case of camera security on a college campus, for example, there would be a number of them in a fairly small edge server that could run on premise. But the notion of ‘continuum’ can also be applied to AI capabilities, which could range from clockwork thresholds, to ML where decisions are made, and ultimately to the generation of new questions that are asked without human intervention, each of which requires a different level of processing power. This variety of need – based on application and location within cloud networks – has led to Intel’s more flexible approach. As Brown explained, historically, Intel built to a customer device: “the chip fits into the device and there you go.” Today, customers are developing more sophisticated configurations that may need multiple solutions, which, at the very least, will co-exist with AI. As a result, Intel has begun to look at a high level at software needs, at functionality and at the value in the system from the end user perspective, and to build the stack from there. “When you start to look down into the stack, you find we are supporting a lot of different things. It’s not just one chip – either Xeon or a Movidius VPU. We put together reference designs, and solutions that are a combination of the different chip solutions that we have. The customer that may be using a CPU today, may need more CPU performance for AI. And so we add ‘accelerators’ – a plug-in card that has multiple Movidius VPUs because the solution now requires more channels for video analytics.”

Connecting the chips

To unify these disparate platforms, Intel now offers middleware, which Brown described as “where the exciting transformation is occurring” within the company. A year ago, to integrate various platforms and system-level software, the company launched a software package for IoT called OpenVINO (open visual inference and neural network optimization), which is now available both as open source and in an Intel distribution. Essentially, the free toolkit allows customers to port their algorithms or their deep neural network inference algorithms to any one of the Intel platforms, to optimize the application, and then to map it to whatever silicon they choose to improve performance. “You can decide when you are on your laptop that you want to run some neural networks,” Brown explained. “You run OpenVINO and then run them on a laptop with a Core i5 CPU, or on another CPU – for example, on a PC supporting digital signage which has accelerators for doing AI.” With OpenVINO, the customer can develop a software solution, and then – once the software is complete – decide which silicon platform is most appropriate: Intel CPUs, Intel FPGAs or Intel vision products like Movidius VPUs. As Brown noted, this is a much more flexible way to build systems. “We can’t tell customers which device should have which AI algorithm, or whether AI belongs in the data centre or at the edge. Customers have to decide how they will partition the intelligence in their applications, and how they will want to consume all the intelligence that is being generated. But we can support them with tools like OpenVINO that help them map their algorithms to run better in the cloud, or in Xeon, or at the edge, or on a very small Movidius VPU edge device. This is where the stack at Intel is now being defined in a really interesting way.”
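The "write the software first, choose the silicon later" workflow Brown describes can be sketched in Python. This is a minimal illustration rather than Intel's reference code: it assumes the Python API shipped with recent OpenVINO releases (`openvino.Core` with `read_model`, `compile_model` and `available_devices`), which postdates the launch described here, and the helper names, the preference order and the `model.xml` path mentioned below are hypothetical.

```python
from typing import Iterable, Sequence


def choose_device(available: Iterable[str], preferred: Sequence[str]) -> str:
    """Return the first preferred device type that the runtime reports.

    A runtime may report indexed names such as "GPU.0", so only the part
    before the dot is compared against the preference list.
    """
    available_types = {name.split(".")[0] for name in available}
    for device in preferred:
        if device in available_types:
            return device
    raise RuntimeError(f"none of {list(preferred)} is available")


def compile_for_best_device(model_path: str,
                            preferred: Sequence[str] = ("GPU", "CPU")):
    """Compile one unchanged model for whichever preferred device is present."""
    # Imported lazily so the selection logic above works without OpenVINO installed.
    from openvino import Core  # assumption: OpenVINO 2023.1 or later

    core = Core()
    device = choose_device(core.available_devices, preferred)
    model = core.read_model(model_path)        # same model file for every target
    return core.compile_model(model, device)   # the target is just a string
```

Calling `compile_for_best_device("model.xml")` on a machine with only a CPU would compile for the CPU, while the same call on a machine that also exposes an integrated GPU would target the GPU. The application code stays the same in both cases, which is the flexibility the article attributes to the toolkit.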

Beyond customer builds, Brown noted that OpenVINO provides an opportunity for third parties to build the customizations needed in defining cloud network, IoT and edge solutions. An ecosystem of software vendors or ISVs that use OpenVINO tools to optimize their software would expand the potential for customers to integrate ISV code with their own OpenVINO-optimized applications. This could deliver a win-win-win scenario: for customers looking to orchestrate data, applications and hardware; for third parties looking to define a ready base of client ecosystem partners; and for Intel channel partners looking to add value to platform sales. How do standards grow?