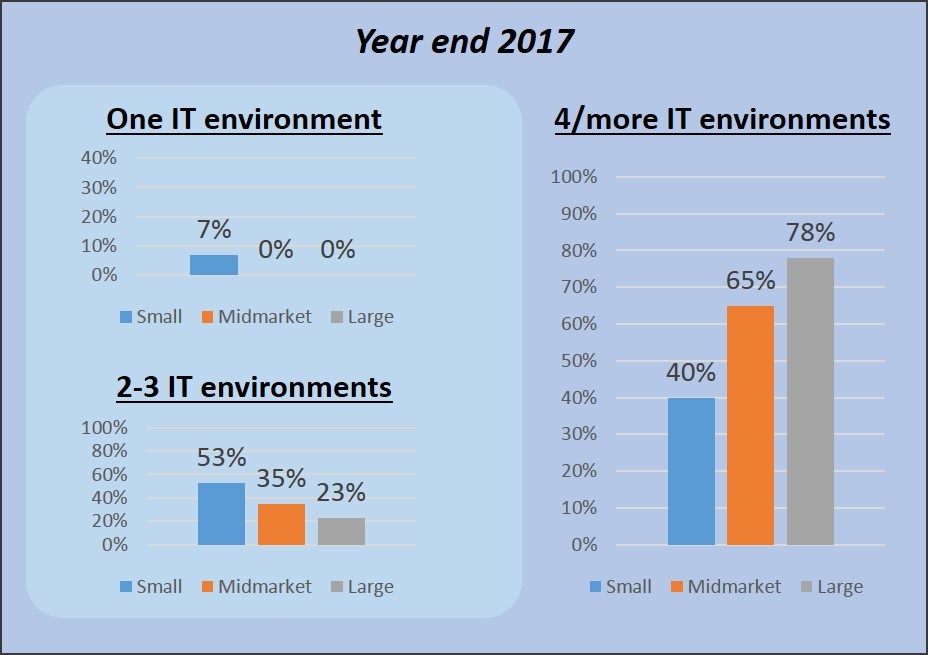

Cloud computing is a done deal. Virtually all organizations now use cloud in one form or another: the value proposition of SaaS is no longer a talking point but a delivery standard that has penetrated businesses of all sizes. Similarly, PaaS and IaaS are expanding their toehold in the Canadian marketplace, accelerated by the recent entry of several global public cloud providers into Canadian territory. But if cloud benefits are now well understood, the transition to cloud continues to be a work in progress as Canadian businesses demonstrate an ongoing commitment to a multiplicity of computing platforms. That “enterprise tech” increasingly means “hybrid IT” is the conclusion of InsightaaS, which has forecast roughly equivalent spending for on-premise and XaaS IT by 2020, as well as the use of four or more varieties of IT environment (on-premise, public/private cloud, hosted infrastructure or remote private cloud, colo) by a clear majority of Canadian enterprises by the end of 2017.

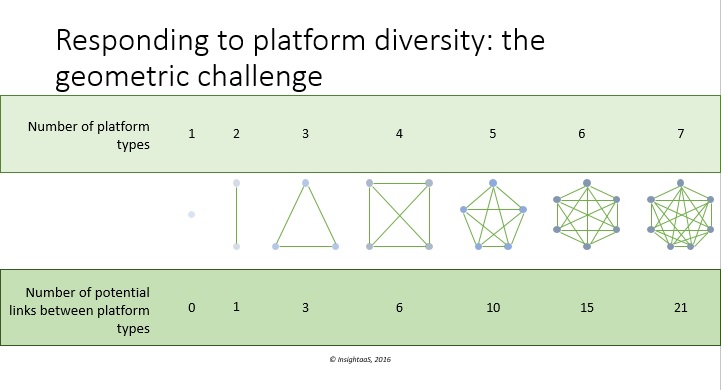

With its tidy phrasing, “Hybrid IT” suggests a compute paradigm that is well in hand. But if hybrid deployment is the preferred approach to accommodate a business’s parallel need to exploit cloud efficiencies and to support legacy apps that cannot move to cloud or mission critical/sensitive data that shouldn’t, it is also one of the most challenging to manage. This complexity is due in large part to the need for connecting clouds, and cloud to on-premise infrastructure. In his discussions on IT infrastructure with Canadian businesses, Peter Near, national director of systems engineering for VMware Canada, has found cloud networking to be top of mind: “A lot of the conversations that I’m having with customers at a strategic level have to do with cloud in some way. Often, we talk about private cloud – how you bring in cloud models and cloud economics to your on-premise data centre. But more frequently, we talk about how companies can move to the public cloud and how they can start to think of hybrid cloud models. Networking is always a part of that conversation.” In the InsightaaS figure below, some of the challenge in managing these connections between multiple platforms is outlined.

In this figure, the growing complexity involved in building network links between increasing numbers of platforms in a hybrid environment is shown at a high level; in real-life IT, the intricacy of these linkages may be further complicated by several factors, including multiple apps in one “platform” category, multiple sites with thousands of users, the need to connect with customers and partner sites, and the need to ensure network security and performance throughout. To illustrate some of the real-life network challenges cloud users may need to overcome, Near offered a concrete example that is also one of the most prevalent cloud use cases. In disaster recovery (DR), the business will typically have an on-premise data centre running, as well as some space reserved in a public cloud for disaster recovery. If an emergency occurs and the business needs to access its DR resources, one of the prerequisite steps will be rerouting the entire network to support use of the DR site. According to Near: “this means ensuring all the firewall rules are kept up to date between those two sites, and potentially remapping IP addresses. Though DR may be a well understood concept, there is still a lot of manual, or script-based work that needs to happen in order that the business be able to actually respond to a disaster.” Drawing on a historical metaphor, Near noted: “If you think back to the early telco days, when someone would unplug a wire from one place and plug it in to another to re-route the call, similar things need to happen when you are recovering from a disaster – you will have to map applications that may have been going to site A that now need to go to site B, and manage all the trickle-down effects as well to keep your applications running.”
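The IP remapping Near mentions can be pictured with a small sketch. It assumes, purely for illustration, that the primary and DR sites use subnets of the same size and that each host keeps its offset within the subnet; the function name and the address ranges are hypothetical and are not drawn from any VMware product.

```python
import ipaddress


def remap_address(addr: str, primary_net: str, dr_net: str) -> str:
    """Return the address in dr_net at the same host offset as addr in primary_net."""
    ip = ipaddress.ip_address(addr)
    src = ipaddress.ip_network(primary_net)
    dst = ipaddress.ip_network(dr_net)
    if ip not in src:
        raise ValueError(f"{addr} is not inside {primary_net}")
    if src.version != dst.version or src.prefixlen != dst.prefixlen:
        raise ValueError("primary and DR subnets must be the same size")
    # Keep the host's position within the subnet, change only the network part.
    offset = int(ip) - int(src.network_address)
    return str(dst.network_address + offset)


# Hypothetical example: a server at site A and its counterpart at site B.
print(remap_address("10.1.4.25", "10.1.0.0/16", "172.20.0.0/16"))
```

In practice the mapping is rarely this regular, which is part of why the manual and script-based work Near describes persists.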

The time and effort involved in these processes will no doubt vary with the size and complexity of the organization itself – in DR, as in other areas of IT, there is not really a “typical enterprise.” In the case of disaster, Near explained, business continuity will depend on the business having the right people available to work through complex run books for different teams and different technology silos, so that recovered IT components all come together and work correctly. There’s likely some automation in each of those silos to help, he added – VMware’s Site Recovery Manager, for example, can move many of the virtual servers over and make sure that these are started up in the right order, and there would likely be similar approaches in place on the storage and networking sides of DR. On the networking front, ongoing work is needed to ensure that the primary site and the secondary site match – a firewall rule in the primary site will also need to be in place in the DR site. According to Near, “In the best case, it’s the same firewall and the same network; in the more likely case, your primary site and your DR site will be different, especially for the Tier 2 type of application. You may have one type of hardware on your primary site and another type of hardware on the DR site, so there’s a lot of manual effort involved in making sure those firewall and those routing rules match.” While many organizations will no doubt have the staff and processes in place for their critical Tier 1 applications (ERP would be kept well up to date as far as DR procedures are concerned), there might not be as much scrutiny of processes like testing for Tier 2 applications, so the response to a disaster affecting email, customer service applications, etc., may not be as timely.
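The ongoing work of keeping primary and DR firewall rules in step amounts to a drift check. The sketch below assumes the rules from both sites have already been exported and normalized into a common, vendor-neutral form, which is itself a large part of the manual effort when the hardware differs. The `Rule` fields and the sample rules are hypothetical.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    """A simplified, vendor-neutral firewall rule (hypothetical schema)."""
    source: str
    destination: str
    port: int
    action: str


def rule_drift(primary: list[Rule], dr: list[Rule]) -> dict[str, list[Rule]]:
    """Report rules present on one site but not on the other."""
    primary_set, dr_set = set(primary), set(dr)
    return {
        "missing_in_dr": sorted(primary_set - dr_set),
        "extra_in_dr": sorted(dr_set - primary_set),
    }


primary_rules = [
    Rule("web-tier", "db-tier", 5432, "allow"),
    Rule("any", "web-tier", 443, "allow"),
]
dr_rules = [
    Rule("any", "web-tier", 443, "allow"),
]

drift = rule_drift(primary_rules, dr_rules)
for rule in drift["missing_in_dr"]:
    print("DR site is missing:", rule)
```

Running a check like this on a schedule, rather than only during a DR test, is one way the less scrutinized Tier 2 applications could be kept from falling behind.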

At a more complex level, when public cloud is part of the organization’s overall infrastructure operation, network policies, security policies, and simply how things flow from one part of the network to another become even more challenging. Difficulties often occur, Near noted, because the on-premise data centre will likely have hardware in place to manage very specialized functions, but mapping hardware to concepts that are very different in the cloud, or maybe don’t even exist in the cloud, can become problematic: “I see a lot of customers say, ‘I want to get to a hybrid cloud, but the networking piece and the way I have done networking in the past is getting in my way’.” For example, if the organization has a security appliance deployed in a private data centre that is monitoring for malicious activity in the network, extending that capability, or putting that same hardware out to the public cloud, may not be possible.

Performance troubleshooting is another circle to square in complex hybrid environments. In a traditional data centre, the typical approach to issues with application performance is to pull in all the specialists that might have touch points into an application – the storage team would investigate on the storage side, the networking team would look at what’s going on from a networking perspective, and the server team would also be brought in to try to solve the problem. But as Near explained, when infrastructure has expanded to multiple public clouds, visibility into applications changes significantly because external teams are involved, and because there are items in the public cloud that are so abstracted the customer simply doesn’t have visibility: “So the answer to the question ‘why is my business-critical application not working, or not working as well as it might be?’ is often ‘I don’t know.’”

To help, VMware has been working to extend visibility into the network as traffic moves into the public cloud – a critical requirement for cloud users, and a key priority for the company which has demonstrated its recognition of cloud relevance through relationships with IBM and Amazon, in which the VMware capabilities have been deployed to SoftLayer and to AWS. As Near explained, visibility into the network layer in cloud is “absolutely necessary” to monitor network flow that goes through an application and potentially to multiple clouds, and to check performance and notify when and where there is an issue. And to do this well, it’s necessary to provide visibility across both physical and virtual components of the network. “We can give you visibility into your software defined networks, whether they be private cloud or public cloud,” he noted, and “we can also see when that is hopping out to your physical networks. By combining physical networks with SDN, we can highlight any security vulnerabilities that exist across that entire hybrid cloud environment and help with troubleshooting across the entire hybrid network.”

As an example, Near described requirements for monitoring a PoS system. To understand why point of sale might be running slowly on Boxing Day, the flow of traffic would have to be monitored as it moves from a particular store, across a WAN, into a perimeter network, into a software defined network within the local data centre, where there may be traffic that flows between a web server and database server, and potentially out into a customer account system. In an SDN world, he argued, more information is available because it’s possible to see what policies were affected and what happened in SDN, but also to access information from across that entire data path: “so, for example, traffic flowed through this firewall, and had these policies applied to it before it went to the next step. We can even go back in time to say the performance issues started around 3am and then rollback the clock to see what was happening with the entire network at that time. That kind of visibility across all these things – physical, software defined and cloud – provided by vRealize Network Insight is really powerful.”
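The value of seeing the whole data path can be illustrated with a toy comparison of per-hop latency against a baseline. This is only a sketch of the idea: it does not use or represent the vRealize Network Insight API, and the hop names and millisecond figures are invented for the PoS scenario above.

```python
def largest_regression(baseline_ms: dict[str, int],
                       current_ms: dict[str, int]) -> tuple[str, int]:
    """Return the hop whose latency grew the most, with the increase in ms."""
    deltas = {
        hop: current_ms[hop] - baseline_ms[hop]
        for hop in baseline_ms
        if hop in current_ms
    }
    if not deltas:
        raise ValueError("no hops in common between baseline and current data")
    worst = max(deltas, key=deltas.get)
    return worst, deltas[worst]


# Hypothetical measurements along the path from store to customer account system.
baseline = {"store-to-wan": 20, "perimeter": 2, "sdn-web-to-db": 5, "customer-accounts": 12}
boxing_day = {"store-to-wan": 22, "perimeter": 3, "sdn-web-to-db": 48, "customer-accounts": 14}

hop, increase = largest_regression(baseline, boxing_day)
print(f"Largest slowdown: {hop} (+{increase} ms)")
```

Without measurements from every segment, physical and software defined alike, the comparison cannot be made at all, which is the gap Near describes.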

In hybrid IT, creating security continuity across IT environments takes on added weight. As noted above, it can be difficult to replicate on-premise security capability across platforms; however, broad accessibility to a shifting cloud also challenges traditional perimeter defense strategies. As Near explained, “You can expand your perimeter to include the cloud and put in specific network fences around the cloud so that you have a wider perimeter, but the reality, given that a lot of your applications are interacting directly with your customers or with your business partners is that that perimeter is permeable. There are a lot of people coming in and out of that perimeter: it’s still important to make sure that the perimeter is well defined but you need to assume that there are bad actors inside and put in policies that work through that reality.”

From a security perspective, the organization’s goal is to create security policies that will define a particular security posture, implement that in the local data centre, and then ensure this posture is intact as the organization moves to a public cloud or a hybrid cloud. While security has typically been hardware-based, and focused on traditional ways of thinking about how traffic moves across physical networks, a software-based policy approach has the advantage of extending across physical, virtual and cloud platforms. In developing multi-platform security for customers, VMware often works with partners, such as Palo Alto Networks, CheckPoint, and Fortinet, which provide advanced security in private data centres but can extend that capability into the public cloud since the underpinning of the solution is software defined networking. According to Near, “The next generation [infrastructure] is focused much more on policies and automation to ensure that you can put that policy across everything you do. From that perspective, VMware and our next-gen security partners are best friends because if we want to solve a customer’s entire networking and security business problem, the best way to do it is to work together with concepts around “change how you think about doing this” to make it [networking] manageable.”

Ultimately, Near observed that in the current and near term, support for any application will be made up of physical network aspects and software defined aspects, and a lot of companies will continue to invest in perimeter security consisting of high end firewalls and high end routers to ensure performance and security. However, automated deployment of security policy across hybrid platforms delivered by SDN can improve security – as well as productivity. With VMware’s NSX solution, for example, new security policies can be automatically applied out to all the different services that IT is providing, a clear benefit when the same internal service is offered multiple times – as in the case, for example, of a secure development platform for the development team. According to Near, with the security “‘must haves’ automated and ready to go, you really improve speed and time to market for the applications and business initiatives you might be developing. When a business is looking to deploy a game changing application out to their customers, and they go to IT and ask how long is this going to take, the answer can’t be ‘let me take a look at it and get back to you in a couple of months once we have done an analysis of security and deployment’. The answer needs to be ‘right away!’” So, the answer must be today, it has to be secure, and software defined networking has to extend out to the cloud so that the business can take advantage of all the flexibility, agility and economic benefits offered by cloud.
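The idea of defining a security policy once and having it applied wherever a service runs can be sketched as follows. This is an illustration of tag-based policy selection in general, not of the NSX API; the class names, tags, ports and workload names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    platform: str          # e.g. "on-premise" or "public-cloud"
    tags: frozenset


@dataclass(frozen=True)
class Policy:
    name: str
    selector_tag: str      # the policy applies to any workload carrying this tag
    allowed_ports: tuple


def apply_policy(policy: Policy, workloads: list[Workload]) -> dict[str, tuple]:
    """Map each matching workload to the ports the policy allows, on any platform."""
    return {
        w.name: policy.allowed_ports
        for w in workloads
        if policy.selector_tag in w.tags
    }


dev_policy = Policy("secure-dev-platform", "dev", (22, 443))
inventory = [
    Workload("dev-env-1", "on-premise", frozenset({"dev"})),
    Workload("dev-env-2", "public-cloud", frozenset({"dev"})),
    Workload("erp-db", "on-premise", frozenset({"tier1"})),
]

for name, ports in apply_policy(dev_policy, inventory).items():
    print(name, "->", ports)
```

Because the policy selects on a tag rather than on a device or location, a new development environment picks up the same rules the moment it is tagged, whichever platform hosts it.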

If awareness of SDN advantages has been building for some time, Near noted that “the concept of software defined networking represents a change that people need to become comfortable with through real world successes.” SDN as a concept has had significant uptake in the US and many Canadian organizations are now making the shift. Near believes major change is underway: “The tide is turning. In Canada, I see 2017 as a pivotal year for SDN with significant customer mind-shift happening right now in-country and a doubling of the number of Canadian NSX implementations annually. We are now building out several certifications – government and military certifications in NSX – and in other areas such as PCI compliance, certifications are emerging that can only be met with software defined networking” – independent validations that should deliver the real-world examples needed to drive next generation cloud networking.