Enterprise IT management has never been easy and, despite the best of intentions, cloud computing has yet to make life any simpler for systems operators and managers. The global scale of modern networks, the rapid pace of technological advancement and the requirements for application agility and resilience have created more challenges for both IT customers and their providers. Cloud native computing is a further complicating factor, since it replaces monolithic applications with potentially large numbers of software components.

One of the keys to success with cloud management is automation. Activities that have traditionally required human intervention (operations, administration, maintenance and provisioning) do not easily scale to global, real-time networks. Application and infrastructure moves, adds and changes must be automated, security must be ‘by design’, and resilience to failure must be built in. This is where Kubernetes, an open source orchestration platform originally developed at Google, comes in as a strong option for application orchestration and resource management.

To begin, it is important to understand what Kubernetes can do, where it fits into the cloud ecosystem and why it is needed. The ultimate question, whether Kubernetes is the “final answer” for cloud native application management, may then help guide deployment decisions.

The cloud native landscape

Cloud computing has progressed from its early days as a shadow IT application and the more recent ‘lifting and shifting’ of legacy applications to more complex, cloud native hybrid and multi-cloud solutions. In this context, native usually refers to ‘born in the cloud’ technologies such as microservices, containers (Docker and others), service meshes (e.g., Linkerd and Istio) and serverless functions. Cloud native computing is emerging as a designator of new levels of cloud maturity.

The Cloud Native Computing Foundation (CNCF) describes cloud native computing as the use of “an open source software stack to deploy applications as microservices, packaging each part into its own container, and dynamically orchestrating those containers to optimize resource utilization.” The microservices architecture is a key driver of cloud native systems: it constructs complex distributed applications out of sets of loosely coupled software components (i.e., microservices). These microservices are hosted in containers, a form of operating-system-level virtualization. Container platforms support packaging, deploying, orchestrating and executing microservices, and relieve application developers of the need to acquire, configure and manage the underlying software execution and infrastructure services.

Despite the terminology, cloud native services are not limited to the cloud; they can be deployed not only in public and private clouds but also on edge computing nodes and in Internet of Things environments.

Cloud native with Kubernetes

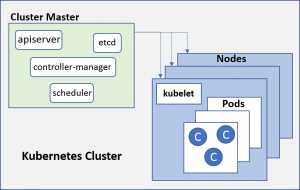

The basic elements of a Kubernetes cluster are illustrated in Figure 1. A cluster is a specified set of physical or virtual nodes that are controlled by a cluster manager. Each node hosts one or more pods, and each pod includes one or more containers. Basic Kubernetes functions include initial creation and placement of containers; scheduling, monitoring, scaling and updating container instances; and managing the deployment of infrastructure elements such as load balancers, firewalls, virtual networks and logging. Kubernetes can be used to automate scalability and resilience, and it is extensible to allow the addition of new functions. Although Kubernetes supports multiple container runtimes, Docker is the best known. Open source standards for container runtime and container image specifications are being developed by the Open Container Initiative.
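To make the cluster, node, pod and container hierarchy, and the idea of automated placement and self-healing, more concrete, here is a toy Python model. It is a sketch under simplifying assumptions, not how Kubernetes is implemented. Every name in it (`Cluster`, `schedule`, `reconcile`, the CPU figures) is hypothetical. Each pod carries a single CPU request in millicores, and placement uses one rule, "most free CPU". That rule only loosely echoes the filter-then-score approach of the real scheduler. The `reconcile` method imitates a controller's control loop: it compares the desired replica count with the pods actually running and closes the gap.

```python
from dataclasses import dataclass, field
from itertools import count

# Toy model only: all class names, fields and the placement rule are hypothetical.

@dataclass
class Container:
    name: str
    image: str

@dataclass
class Pod:
    name: str
    app: str                     # label saying which application owns the pod
    containers: list[Container]
    cpu_request: int             # simplified: one CPU request (millicores) per pod

@dataclass
class Node:
    name: str
    cpu_capacity: int            # allocatable CPU in millicores
    pods: list[Pod] = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu_capacity - sum(p.cpu_request for p in self.pods)

@dataclass
class Cluster:
    nodes: list[Node]
    _ids: count = field(default_factory=count)   # source of unique pod name suffixes

    def pods_of(self, app: str) -> list[Pod]:
        return [p for n in self.nodes for p in n.pods if p.app == app]

    def schedule(self, pod: Pod) -> Node:
        # Filter: keep only nodes with enough free CPU for the pod's request.
        fits = [n for n in self.nodes if n.free_cpu() >= pod.cpu_request]
        if not fits:
            raise RuntimeError(f"no node has room for {pod.name}")
        # Score: prefer the node with the most free CPU, which spreads load.
        node = max(fits, key=lambda n: n.free_cpu())
        node.pods.append(pod)
        return node

    def reconcile(self, app: str, image: str, replicas: int, cpu: int) -> None:
        # One pass of a control loop: compare observed pods with the desired count.
        running = self.pods_of(app)
        for _ in range(replicas - len(running)):   # too few: create and place
            name = f"{app}-{next(self._ids)}"
            self.schedule(Pod(name, app, [Container(app, image)], cpu))
        for pod in running[replicas:]:             # too many: delete the extras
            for n in self.nodes:
                if pod in n.pods:
                    n.pods.remove(pod)

if __name__ == "__main__":
    cluster = Cluster([Node("node-a", 2000), Node("node-b", 2000)])
    cluster.reconcile("web", "nginx:1.25", replicas=3, cpu=500)
    print([len(n.pods) for n in cluster.nodes])        # [2, 1]
    cluster.nodes.pop(0)                               # simulate losing node-a and its pods
    cluster.reconcile("web", "nginx:1.25", replicas=3, cpu=500)
    print([p.name for p in cluster.pods_of("web")])    # ['web-1', 'web-3', 'web-4']
```

The second `reconcile` call shows the essence of self-healing. The system is told what should exist, not which steps to perform. After a node disappears, the same loop recreates the lost replicas on the surviving node.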

Kubernetes implementation patterns vary significantly. The most basic is a do-it-yourself customer implementation using the open source code. Vendor-supported packages (such as Red Hat OpenShift) include software support and may include extensions that help differentiate the products. One example of such an extension is the recently announced Red Hat CodeReady Workspaces, which is advertised as “a Kubernetes-native, browser-based development environment that enables smoother collaboration across the development team.” Major cloud providers now offer containers-as-a-service and managed Kubernetes, including Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), Amazon Elastic Container Service for Kubernetes (Amazon EKS), IBM Cloud Kubernetes Service and Oracle Cloud Infrastructure Container Engine for Kubernetes.

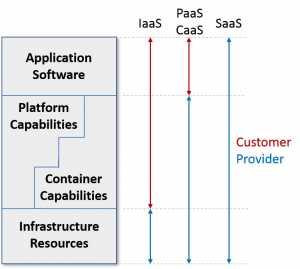

The roles and responsibilities of the customer and the provider in Kubernetes management vary by service model (see Figure 2). In SaaS configurations, where the vendor operates the whole stack, the use of Kubernetes may not even be visible to the customer, whereas in an IaaS environment the customer owns and operates both the applications and the container platform.

The RightScale 2019 State of the Cloud Report indicates that Kubernetes use is increasing, up from 27 percent in 2018 to 48 percent in 2019.

Where does Kubernetes fit?

Various acronyms have been used to describe different views of IT management systems including FCAPS (fault, configuration, accounting, performance and security), ITIL or ITSM standard processes, OAM&P (operations, administration, maintenance and provisioning) and DCIM (data center infrastructure management). None of these were built with cloud native computing in mind, so extending these frameworks to address cloud computing, edge computing, software execution platforms, highly distributed applications and software-defined networks is a key challenge for designers, especially given the increasing need for a holistic approach to management automation.

While managing containers and cloud native systems adds new processes and activities, it does not eliminate the need for traditional management functions. Examples of new requirements include managing relationships with cloud providers individually and in combination (e.g., differences in SLAs, pricing, and roles and responsibilities), managing service subscriptions and configurations (e.g., dynamic scaling of usage and users), managing data security and privacy, and managing performance.

Gartner’s Magic Quadrant for Cloud Management Platforms (CMPs) identifies seven key management functional areas: provisioning and orchestration; service request management; inventory and classification; monitoring and analytics; cost management and resource optimization; cloud migration, backup and disaster recovery; and identity, security and compliance. Kubernetes and its various extension projects are forming the building blocks of a general solution for cloud management. For example, KubeVirt is a virtual machine management add-on for Kubernetes, aimed at allowing users to run VMs alongside containers in Kubernetes clusters. Recent announcements from Rancher Labs take Kubernetes even further: its new k3OS is billed as an operating system that is completely managed by Kubernetes, and k3s is a lightweight version of Kubernetes that can run on edge systems such as the Raspberry Pi.

Benefits of adopting Kubernetes

Kubernetes, or an equivalent, is a must-have for any large multi-container application. With containers, developers have standard execution environments, can choose the programming language that suits each microservice and can move application components from one system to another, such as from a local PC to an AWS server. Orchestrating many such containers is comparable to managing a fleet of virtual machines today, except that each virtual machine carries its own operating system, whereas containers share the host's kernel.

According to IT analyst and cloud native expert Janakiram MSV, Kubernetes is an ideal platform for running contemporary workloads designed as cloud native applications. Kubernetes has become the de facto operating system for the cloud in much the same way that Linux is the operating system for processor hardware. As long as developers follow the best practices for microservices, DevOps teams will be able to package and deploy the software in Kubernetes. Kubernetes also unifies the platform for developers, deployers, operators and managers. Kubernetes, combined with an active cloud native community, can be thought of as a source of building blocks. The advantage over other platforms is that different solutions can be constructed without the limitations that constrain today’s PaaS services; Kubernetes is a foundation on which to build a cloud native PaaS.
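As a concrete illustration of what "package and deploy the software in Kubernetes" involves, the sketch below builds a minimal `apps/v1` Deployment manifest as a Python dictionary and prints it as JSON. `kubectl apply -f` accepts JSON as well as YAML. The helper name `deployment_manifest`, the `app` label and the `nginx:1.25` image are illustrative choices, not anything the article prescribes. The point is that the same declarative description could be applied unchanged to a local test cluster or to a managed cloud service.

```python
import json

def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment (hypothetical helper for illustration)."""
    labels = {"app": name}  # example label; the selector must match the pod template labels
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # desired number of pod copies
            "selector": {"matchLabels": labels},  # which pods this Deployment manages
            "template": {                         # blueprint for each pod
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image,
                         "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }

if __name__ == "__main__":
    # Save the output to a file (e.g. web.json) and apply it with: kubectl apply -f web.json
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3, 80), indent=2))
```

The manifest states only the desired outcome: three replicas of one container image. Placement, restarts and rollout are left to the cluster, which is the division of labor between developers and operators that the paragraph above describes.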

Another example of the technical benefits of Kubernetes can be seen in the IBM Cloud Kubernetes Service offering, which features intelligent scheduling, self-healing, horizontal scaling, service discovery and load balancing, automated rollouts and rollbacks and configuration management. The service also offers advanced capabilities for cluster management, container security and isolation policies, the ability to design your own cluster, and integrated operational tools for consistency in deployment.
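Horizontal scaling, one of the features listed above, can be illustrated with the core formula from the Kubernetes Horizontal Pod Autoscaler documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python function below is a simplified sketch of that calculation only. It is not IBM's or the Kubernetes project's code. It ignores stabilization windows, pod readiness and missing metrics, and its default bounds (`min_replicas=1`, `max_replicas=10`) are hypothetical. The 0.1 tolerance mirrors the autoscaler's documented default.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation: ceil(current_replicas * current / target).

    Ignores stabilization windows, pod readiness and missing metrics.
    The default min/max bounds are hypothetical example values.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))
```

For example, suppose four replicas average 90 percent CPU utilization against a 60 percent target. The function returns `desired_replicas(4, 90, 60) == 6`. A reading of 63 percent falls inside the tolerance band and leaves the count at four.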

Since its release in 2014, Kubernetes has proved to be a valuable element in the cloud stack and has taken the industry by storm. One key Kubernetes advantage is its open source status and wide support; the CNCF claims to have over 375 members, including more than a hundred innovative startups. A second is maturity: part of its value lies in the years of practical use that its forerunner, Borg, received as an internal Google platform. The range of implementation choices is a third benefit, including cloud-based managed service offerings, vendor-supported software distributions with extensions, and the open source public code. As Kubernetes evolves into a mature integration and automation platform, it could stimulate a larger digital transformation of IT management.