On the heels of publishing a first book in the Vision to Value series on analytics best practices, the V2V community has launched into its next research segment with a new report, All About the Data: Classification, Integration, Veracity and Trust.

The V2V book, Analytics in a Business Context, is a compilation of insight generated in V2V community research sessions on four issues that are critical to creating a foundation for corporate analytics success: Developing the Analytics Business Case, Problem Solving: The Right Data for the Right Question, Monetizing Data, and Establishing Analytics Within the Organization. Related to business challenges and opportunities associated with data and analytics, these discussion topics were masterfully organized into book format by InsightaaS principal analyst and author Michael O’Neil. The book’s publication was celebrated at a networking meetup this past February, attended by analytics practitioners and panelists from across the industry spectrum, and headlined by Information Builders CEO Frank Vella.

About the report

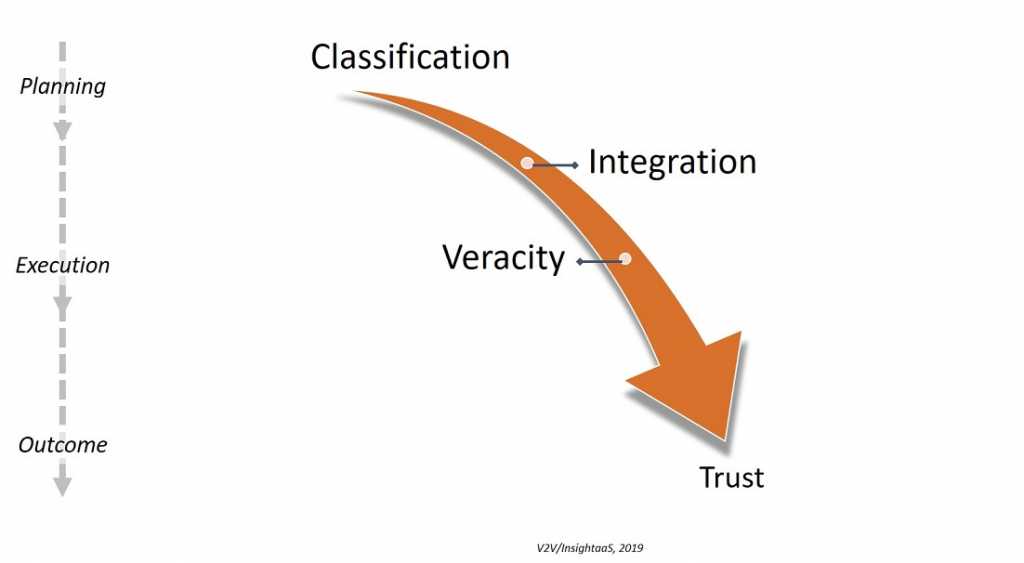

Building on learnings and content developed in this first round of V2V discussions, the next V2V research segment moves from concern with business context to greater focus on advanced management issues. This reorientation is reflected in many of the themes explored in the latest – All About Data – report. For example, riffing on a “think big; start small” approach to data analytics projects, the report advises organizations to develop an overall enterprise plan for mastering data and a governance structure that can look forward, envisioning how each project’s data set may feed into the next initiative, but to build via a succession of discrete “quick wins” that can support expansion of analytics, while applying standards in data classification to drive integration, veracity and trust. The flow of this approach is outlined in a figure from the report, presented below.

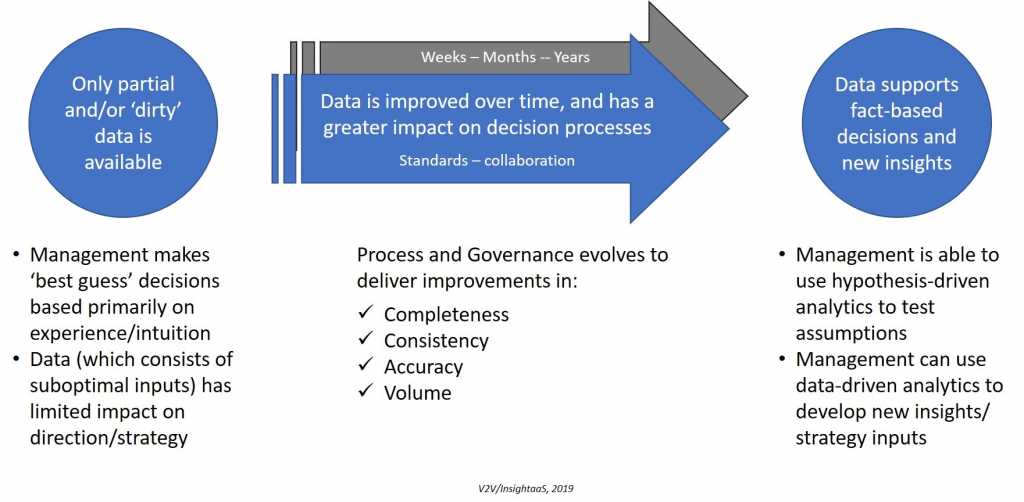

Interestingly, the alignment of technical and business issues is a theme that reverberates in this more technically oriented report on best practices in data usage and management. As the figure below shows, V2V analytics experts have drawn a firm line between partial/“dirty” data and poor business decision-making, data improvements and better decision processes, and trusted data and the scientific testing of hypotheses/the use of data-driven analytics to drive new insights. InsightaaS thanks all contributors to the V2V discussions, and invites those interested in data management processes to access the full report for more information on the path from dirty to trusted data.

Launch event

To showcase best practice activities in the Canadian context as they relate to topics discussed in the report, V2V organizers invited the City of Brampton to present on its digital strategy at a Meetup hosted by V2V sponsor Information Builders at its Toronto location. Representing Brampton, senior manager of Digital Innovation and Information Technology Marie Savitha delivered a talk on Transforming a City – One Data Point at a Time. According to Savitha, Brampton’s digital journey begins with a 2040 Vision Document, which identified security, privacy, digital transformation, transparency/accountability and engagement with the customer as focus areas for the city. Specific initiatives for 2019 that fall under these five pillars include a modernized contact centre, one system for 311, citizen engagement through multiple channels, a 360-degree view of the citizen profile (consumers of city services), GIS integration through a GeoHub, support for improved departmental transparency, accountability and operational efficiencies through the use of data-dependent dashboards, and corporate-wide performance reporting through KPIs on departmental delivery. Within that vision, the city has also articulated its Digital and Technology Strategy (2019-2023), a “think big” plan designed to support these activities by addressing key data challenges. These include the need for more clearly defined roles in data management; greater data accuracy and a single version of the truth, what Savitha called a “data lake as opposed to data swamps”; a robust, open data program hosted on a geographic spatial information hub platform, developed in partnership with firms such as Waze, Strava, and Environics, that may be accessed by city administrators and citizens; the flexibility to expand infrastructure through a multi-cloud distributed architecture; the ability to share data with any source and target; and “BI for everybody.”

Brampton has made considerable progress on this journey. According to Savitha, the city has been streamlining data access and working to ensure data quality. Current successes include a central data repository that houses a single source of truth (the 360 view of citizens engaging with city services), a cultural shift through a digital academy aimed at developing data literacy, employee access to HR data, and a greater tendency for city managers to make business decisions based on quality data. On the data governance front, the city has established a board that ensures compliance and applies privacy controls at the data source. This group has created role-based access to data, and is now setting up a committee to determine what constitutes open data: what data is suitable for open consumption, when open data is exposed to the consumer, and what access controls are in place for personally identifiable data, based on what consent.

Information Builders has been a key partner for the city in this work. As Information Builders account executive Tara Myshrall explained, the company first won an RFP for integrating Brampton’s siloed systems in 2007 with an overall goal of creating real-time business automation. By 2010, partners in the journey began to address data quality issues through IB’s master data management solutions (first for property data); by 2015, usage challenges with a Business Objects (an SAP BI solution) platform prompted the city to move to an Information Builders delivery platform. In her presentation on work with Brampton, Myshrall outlined the creation of IB dashboards for several city departments – the “quick wins” that can demonstrate the value in the big picture enterprise data strategy. Myshrall described a ‘heat map’ dashboard showing the geolocation of incidents for the by-law enforcement department and other data views; the full complement of Brampton dashboards includes portals built to deliver readily accessible information for executive management, for maintenance departments, for enforcement, for fire, transport, and other city operations.

Panel discussion

Following the Brampton case study presentation, a panel composed of analytics experts from the consulting and supplier communities brought their own experiences working in the field to bear on questions posed by panel moderator Michael O’Neil. On the “think big; act small” theme, Stephanie Davis, manager, cyber risk practice, Deloitte Canada, noted that it’s always important to begin with a plan, and that aligning activity with business needs will determine what discrete projects to begin with, while Prasanna Gunasekara, partner at SmartProz, described the evolution of a plan by a regional government through a series of projects aimed at better managing and using data generated by a SCADA system in the municipality’s water plant. Mitchell Ogilvie, solutions architect, Information Builders, added that the size of the organization will have an impact on the “think big; start small” directive: large organizations typically need to “Go Big,” he explained, but will often need a quick win to justify the next projects. Successive projects lead to the scale-up of trust, budget and scope, though it’s also good to have a grand vision and execute against it. According to Michael Proulx, director, research products-mining, Sustainalytics, while “think big; start small” is generally a good approach, it may not work in the mining industry, where ES&G (environmental, social and governance) projects often take on a sense of urgency. The Sustainalytics environmental analytics consultancy works with two constituencies – investors that think big and deploy small, and end user companies that would take the reverse approach, driving culture change with small project completions.

On weighing the importance of data classification, integration, veracity and trust to execution against big strategy, Gunasekara observed wryly that the “biggest integrator continues to be MS Excel,” but stressed that integration is essential to bringing together various systems, and various lines of business in a city. Data integration provides a foundation for building a complete, holistic operational picture; an Excel sheet appeals to individual users because it can be created quickly, but Excel-resident data is inherently siloed, while integrated data from core business systems supports operational workflows. Ogilvie added that it’s important for organizations to integrate using tools, such as master data management, that can deliver on other data requirements, while for Proulx, classification of data is the starting point, as this establishes trust with users. In her comments on AI, Davis echoed this focus on trust: “it comes down to our society’s level of trust – if that is not there, AI, etc. will not work, and it’s especially important on ML (machine learning) for risk management in cyber security.”

On the question of governance, and what the ideal team looks like, panelists agreed that a multi-perspective approach that can speak across business and technical functionality is critical. According to Davis, governance roles are aligned both vertically, ranging from strategic to execution-focused professionals, and horizontally, as application and business owners, data and privacy SMEs are critical; however, in each role, including that of the Chief Data Officer, a combination of technical depth and soft skills is needed. From a technical perspective, she argued for the interrelationship of data quality and governance – for data to be useful and timely, it’s important to know where the data comes from, and whether it is accurate after it’s been translated. In Proulx’s view, a CDO will need familiarity with data and statistics and soft skills including leadership, as this individual will have to connect with C-suite executives, and be a systems thinker who can apply analytics across the organization. Data quality and governance issues, he added, can be informed by standards developed by organizations such as NUSAP, which have established criteria for evaluating metrics (in the case of sustainability metrics). Ogilvie, too, spoke to roles in the establishment of governance: the business owner is typically responsible for establishing governance principles, while data stewards will enforce the right structure, and IT will act as data custodian, using various techniques to execute governance through measures to establish data security, privacy and quality. Borrowing from the UK-based DAMA association, he described the six key data quality metrics as completeness, uniqueness, timeliness, validity, accuracy and consistency.
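To make these quality dimensions more concrete, the following is a minimal Python sketch of how four of the six (completeness, uniqueness, validity and timeliness) might be scored as simple ratios over a set of records. It is an illustration only, not a method described by the panelists or by DAMA: the sample records, field names, function names, postal code pattern and 365-day freshness threshold are all assumptions made for the example. Accuracy and consistency are left out because they generally require comparison against a trusted reference source or a second system.

```python
import re
from datetime import date

# Hypothetical sample records; field names and values are illustrative only.
RECORDS = [
    {"id": 1, "postal_code": "L6Y 4R2", "updated": date(2019, 3, 1)},
    {"id": 2, "postal_code": None, "updated": date(2019, 3, 2)},
    {"id": 2, "postal_code": "L6Y 4R2", "updated": date(2017, 1, 15)},
    {"id": 4, "postal_code": "12345", "updated": date(2019, 2, 20)},
]

# Assumed format check: Canadian-style postal code such as "A1A 1A1".
POSTAL_PATTERN = r"[A-Z]\d[A-Z] ?\d[A-Z]\d"


def completeness(records, field):
    """Share of records in which the field is populated (not None)."""
    filled = sum(1 for r in records if r.get(field) is not None)
    return filled / len(records)


def uniqueness(records, field):
    """Share of records carrying a distinct value for the field."""
    distinct = {r.get(field) for r in records}
    return len(distinct) / len(records)


def validity(records, field, pattern):
    """Share of populated values that fully match the expected format."""
    values = [r[field] for r in records if r.get(field) is not None]
    if not values:
        return 1.0  # nothing populated, so nothing is invalid
    valid = sum(1 for v in values if re.fullmatch(pattern, v))
    return valid / len(values)


def timeliness(records, field, as_of, max_age_days):
    """Share of records updated within max_age_days of the as_of date."""
    fresh = sum(1 for r in records if (as_of - r[field]).days <= max_age_days)
    return fresh / len(records)


if __name__ == "__main__":
    print("completeness:", completeness(RECORDS, "postal_code"))
    print("uniqueness:  ", uniqueness(RECORDS, "id"))
    print("validity:    ", validity(RECORDS, "postal_code", POSTAL_PATTERN))
    print("timeliness:  ", timeliness(RECORDS, "updated", date(2019, 4, 1), 365))
```

Scores like these could feed the kind of departmental dashboards discussed earlier, though the thresholds that count as “good enough” would be a governance decision rather than a technical one.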

While quality and governance discussions may appear academic, the impact of good practice can be significant – “data quality is based on value,” Gunasekara concluded. Working with the City of London’s fire department, he was able to identify 18 different categories of people who are at higher risk of fire. When these groups were mapped to the location of 10 years’ worth of fires, and this data was used to inform the department’s fire prevention marketing campaign, fires were reduced by 20 percent in year one – a single data point that justifies ongoing investments in analytics.

Obtaining the report

All About Data: Classification, Integration, Veracity and Trust is available at no charge to professionals who would like to understand how to align data classification, integration, veracity and trust within their organizations. Please follow this link to reach the download request form.